Agents Aren't so Hard: GPT Newspaper Explained

GPT Newspaper is a project that came out earlier this year and uses agents to build customized newspapers. Building an agent that autonomously completes a task like this sounds daunting. I want to go through the code and show why it's not.

The content below is adapted from a thread I posted on X.

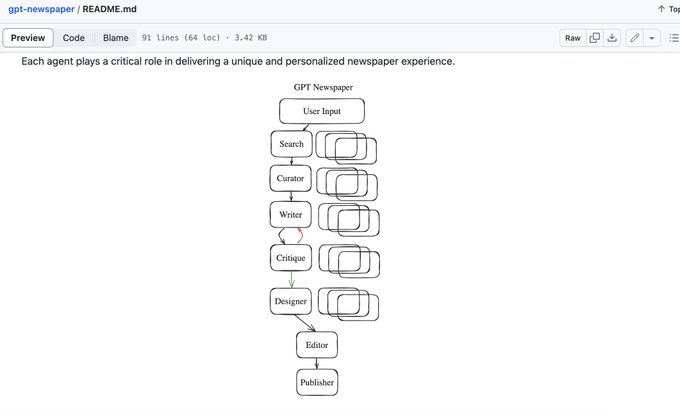

From the repo, which is open source, we can see exactly what the agent is doing:

The authors refer to each of these steps as an Agent. But we can really just think of each one as a thing that performs a specific task, often using an LLM call.

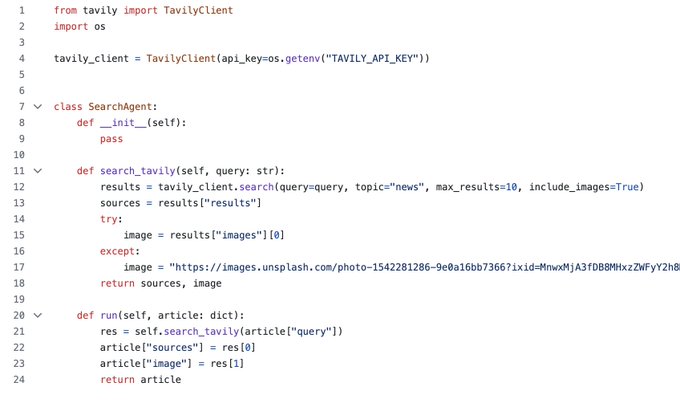

Let's look at the first step, search. It's just a simple Python class with a method that uses an API to get some search results.
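To make that concrete, here's a rough sketch of what a search step like this can look like. This is not the repo's code: `SearchAgent`, `search_fn`, and `fake_search` are names I made up for illustration, and the canned results stand in for whatever search API you'd actually call.

```python
from typing import Callable, Dict, List


class SearchAgent:
    """Hypothetical stand-in for the search step: one class, one method."""

    def __init__(self, search_fn: Callable[[str], List[Dict]]):
        # search_fn is an assumption: any callable that takes a query string
        # and returns a list of {"title", "url", "content"} dicts.
        self.search_fn = search_fn

    def run(self, article: Dict) -> Dict:
        # Look up sources for the article's query and attach them.
        article["sources"] = self.search_fn(article["query"])
        return article


def fake_search(query: str) -> List[Dict]:
    # Canned results so the sketch runs without network access or an API key.
    return [
        {"title": f"{query} story {i}", "url": f"https://example.com/{i}", "content": "..."}
        for i in range(3)
    ]


article = SearchAgent(fake_search).run({"query": "AI agents"})
print([s["url"] for s in article["sources"]])
```

Swapping `fake_search` for a real API client is the only change needed to make this do actual work, which is kind of the point: the "agent" part is tiny.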

While the subsequent agents start using the language model, they're not much more complex. All are just Python classes with one or two methods. Curator just asks the model to filter the results down to the 5 most relevant and return their URLs.
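A curator step along these lines might look something like the sketch below. Again, this is my own illustration rather than the repo's implementation: `CuratorAgent`, the prompt wording, and `fake_llm` are all assumptions, and `llm` is just any function that takes a prompt and returns text.

```python
import json
from typing import Callable, Dict


class CuratorAgent:
    """Hypothetical curator step: ask the model for the most relevant URLs."""

    def __init__(self, llm: Callable[[str], str], top_k: int = 5):
        # llm is an assumption: any callable mapping a prompt to a text reply.
        self.llm = llm
        self.top_k = top_k

    def run(self, article: Dict) -> Dict:
        prompt = (
            f"Topic: {article['query']}\n"
            f"Sources: {json.dumps(article['sources'])}\n"
            f"Return a JSON list of the {self.top_k} most relevant URLs."
        )
        # Parse the reply and cap it at top_k in case the model returns more.
        chosen = set(json.loads(self.llm(prompt))[: self.top_k])
        # Keep only the sources the model selected, preserving original order.
        article["sources"] = [s for s in article["sources"] if s["url"] in chosen]
        return article


def fake_llm(prompt: str) -> str:
    # Canned reply so the sketch runs offline; a real model call would go here.
    return json.dumps([f"https://example.com/{i}" for i in range(5)])


sources = [{"url": f"https://example.com/{i}", "title": f"Story {i}"} for i in range(8)]
curated = CuratorAgent(fake_llm).run({"query": "AI agents", "sources": sources})
print(len(curated["sources"]))
```

In a real version you'd also want to handle the model returning something that isn't valid JSON, but the shape of the step stays the same.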

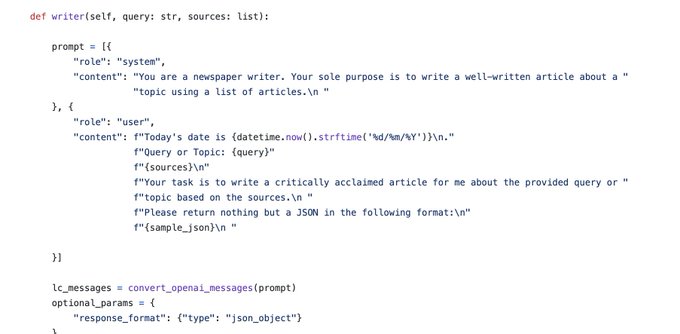

Writer, for example, basically just asks the model to write an article given the text of a few sources from the search step.
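Here's a minimal sketch of a writer step in that spirit. The class name, prompt, and `fake_llm` are hypothetical and only there to show the shape: gather the source text, build a prompt, store the model's reply.

```python
from typing import Callable, Dict


class WriterAgent:
    """Hypothetical writer step: turn source text into an article draft."""

    def __init__(self, llm: Callable[[str], str]):
        # llm is an assumption: any callable mapping a prompt to a text reply.
        self.llm = llm

    def run(self, article: Dict) -> Dict:
        # Concatenate the text of every source into one block for the prompt.
        sources_text = "\n\n".join(s["content"] for s in article["sources"])
        prompt = (
            f"Write a newspaper article about: {article['query']}\n"
            f"Use only these sources:\n{sources_text}"
        )
        article["body"] = self.llm(prompt)
        return article


def fake_llm(prompt: str) -> str:
    # Canned reply so the sketch runs offline; it echoes the prompt's first line.
    return "Draft. " + prompt.splitlines()[0]


article = WriterAgent(fake_llm).run(
    {
        "query": "AI agents",
        "sources": [{"content": "Agents are orchestrated LLM calls."}],
    }
)
print(article["body"])
```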

You can dive into each of the steps, or agents, in the repo. For the most part, they all perform simple tasks. There is one part that is slightly more complicated: a loop between Writer and Critique. This is the type of thing that really makes agents interesting and which enables things like agents which can write code for entire features. But how does it work?

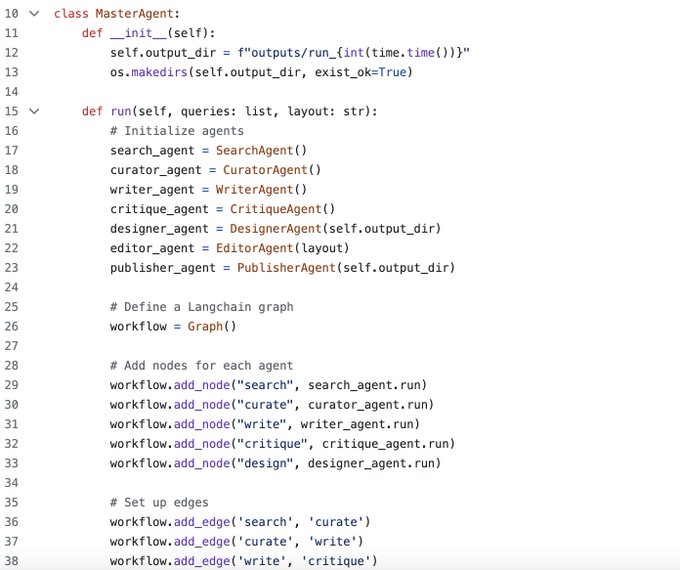

GPT Newspaper uses a tool from LangChain called LangGraph to do this. LangGraph allows us to define the order of execution for these steps.

Critically, it allows the developer to define conditional edges. This lets GPT Newspaper send the article back to Writer for another pass whenever Critique returns feedback instead of approving it.
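To show what a conditional edge boils down to, here's a toy graph runner in plain Python. To be clear, this is not LangGraph's API and not the repo's code: `TinyGraph`, `write`, and `critique` are made-up stand-ins, and the "critique" here just approves the second draft instead of calling a model.

```python
from typing import Callable, Dict

END = "END"  # sentinel meaning "stop the run"


class TinyGraph:
    """Toy graph runner, NOT LangGraph: nodes plus routing functions."""

    def __init__(self, entry: str):
        self.entry = entry
        self.nodes: Dict[str, Callable[[dict], dict]] = {}
        self.routers: Dict[str, Callable[[dict], str]] = {}

    def add_node(self, name: str, fn: Callable[[dict], dict],
                 router: Callable[[dict], str]) -> None:
        # router inspects the state and returns the next node's name (or END).
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, state: dict, max_steps: int = 20) -> dict:
        current, steps = self.entry, 0
        while current != END:
            if steps >= max_steps:  # guard against an endless revise loop
                raise RuntimeError("graph did not finish within max_steps")
            state = self.nodes[current](state)
            current = self.routers[current](state)
            steps += 1
        return state


def write(state: dict) -> dict:
    # Stand-in for the Writer's LLM call: each pass produces a new draft.
    state["drafts"] = state.get("drafts", 0) + 1
    state["article"] = f"draft {state['drafts']}"
    return state


def critique(state: dict) -> dict:
    # Stand-in for the Critique's LLM call: accept from the second draft on.
    state["critique"] = None if state["drafts"] >= 2 else "tighten the intro"
    return state


graph = TinyGraph(entry="write")
graph.add_node("write", write, lambda s: "critique")
# Conditional edge: loop back to the writer while feedback remains.
graph.add_node("critique", critique, lambda s: "write" if s["critique"] else END)

result = graph.run({})
print(result["article"])
```

The whole "loop" is that one routing function on the critique node. A library like LangGraph gives you a nicer way to declare it, plus state handling, but the idea is the same.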

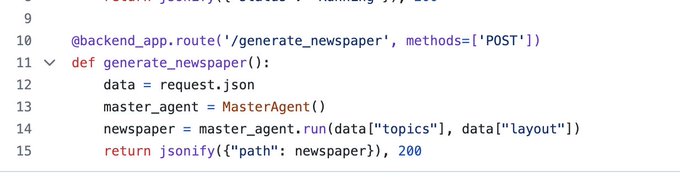

Lastly, we just kick off the graph run, and now you have an agent that can go off and autonomously complete a multistep task. In this case, it builds a customized newspaper.

The key point is that really all we're doing here is defining a flow of steps and maybe some conditional loops. And each step generally consists of pretty simple LLM calls. But by putting all these simple things together we can build something really cool.

There are so many use cases for basic agent workflows like this. For example, I could modify this to build a tool that turns this Twitter thread into a blog post. Or one that runs a diligence report on a startup. Or one that researches a given person.

Agent AI is building a platform and "professional network" for agents just like this. Following this pattern, you can build the exact types of tools that are on there. Platforms like Lindy AI are allowing consumers to build their own more advanced versions of workflows like this. Two things are true: these tools are magic, but they're also just well-orchestrated LLM calls.

Here's the repo for GPT Newspaper by Rotem Weiss: